Smart Agent Assist: Leveraging LLMs for Enhancing Customer Support Efficiency and Reducing Operational Costs

The Customer

A prominent UK retail company, renowned for its vast and loyal customer base, is committed to delivering exceptional customer experiences. The dedicated customer support services enhance every customer interaction, ensuring a seamless and enjoyable journey. The company strives to promptly resolve issues and provide the necessary assistance for a truly satisfying experience.

The Challenge

The client faced significant operational challenges in their contact center, including high average handling time (AHT), low first-time resolution rates, and elevated error rates during live calls. They also struggled with prolonged after-call work, difficulty understanding accents, and non-compliance in handling cases involving vulnerable customers. These complexities underscored the need for robust solutions to optimize efficiency and enhance customer service delivery. The client believed that integrating real-time speech-to-text transcription and knowledge assistance was key to addressing these issues, but they lacked the expertise to execute this effectively.

The Solution

To address the challenges in the client’s contact center operations, EXL implemented a comprehensive AI-driven solution designed to optimize efficiency and enhance customer service delivery. EXL rigorously evaluated several large language models (LLMs) and selected the most suitable one based on critical factors: model effectiveness, architectural scalability to longer context lengths, efficient KV cache memory management, and adherence to the customer’s guidelines on open-source versus closed-source solutions. The chosen LLM, with its strong instruction-following capabilities, laid a solid foundation for addressing the client’s requirements.
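To make the KV cache criterion concrete, the following is a minimal sketch of how cache memory grows with context length. It uses the standard estimate for decoder-only transformers (keys plus values, per layer, per KV head, per token). The `ModelConfig` class and both configurations are hypothetical and chosen for illustration; they are not the models evaluated in this case study.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelConfig:
    """Hypothetical decoder-only transformer dimensions (illustrative only)."""
    num_layers: int
    num_kv_heads: int  # smaller than the attention-head count under grouped-query attention
    head_dim: int
    bytes_per_value: int = 2  # 2 bytes per value for fp16/bf16


def kv_cache_bytes(cfg: ModelConfig, context_tokens: int, batch_size: int = 1) -> int:
    """Approximate KV cache size: one key and one value vector per layer, KV head and token."""
    per_token = 2 * cfg.num_layers * cfg.num_kv_heads * cfg.head_dim * cfg.bytes_per_value
    return per_token * context_tokens * batch_size


# Assumed configurations for comparison, not the models from the evaluation.
full_attention = ModelConfig(num_layers=32, num_kv_heads=32, head_dim=128)
grouped_query = ModelConfig(num_layers=32, num_kv_heads=8, head_dim=128)

for name, cfg in [("multi-head", full_attention), ("grouped-query", grouped_query)]:
    gib = kv_cache_bytes(cfg, context_tokens=8192) / 2**30
    print(f"{name}: {gib:.2f} GiB for an 8192-token context")
```

Under these assumptions, cutting the number of KV heads by a factor of four cuts the cache by the same factor, which is one reason the architecture can matter for long call transcripts.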

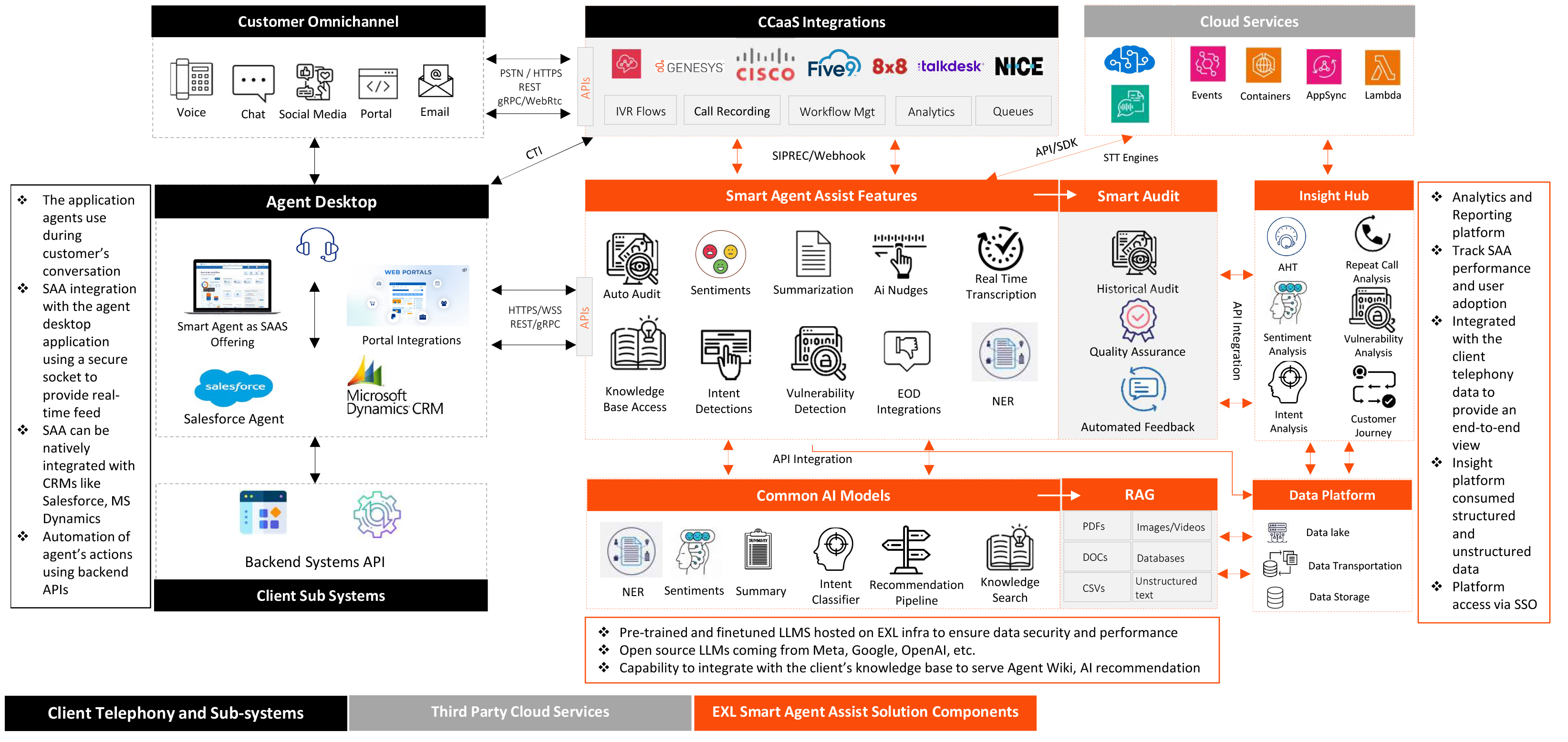

For the solution architecture, EXL devised a robust framework consisting of multiple integrated modules. The integration process involved a seamless transition from the client’s existing systems to the GenAI model within EXL’s cloud environment.

Module 1

Facilitated seamless integration with the client’s telephony platform, Genesys, ensuring compatibility and real-time data exchange.

Module 2

Deployed a sophisticated GenAI model within EXL’s cloud environment, equipped to deliver real-time nudges and extract relevant entities from ongoing conversations.

Module 3

Focused on enhancing speech-to-text capabilities through custom model training, converting live audio streams into text with high accuracy and using AI to summarize calls in near real time.

Live calls were captured from the telephony platform using AudioHook/SIPREC connections and processed within EXL’s AWS infrastructure. Amazon Kinesis handled data streaming, while custom AI models tailored to specific customer needs (entity extraction, vulnerability detection, and call summarization) ensured that agents received pertinent information in real time via a unified agent desktop interface.

The implementation of EXL’s AI-driven solution, underpinned by a carefully selected and highly capable LLM, successfully addressed the challenges in the client’s contact center operations. The result was reduced average handling times, improved first-time resolution rates, and a more efficient, responsive, and customer-centric service that not only met but exceeded the client’s expectations.
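As a rough illustration of the streaming step in this section, the sketch below shows how a Lambda-style function might decode transcript segments delivered by Kinesis and attach a vulnerability flag. Kinesis delivers each record's payload base64-encoded under `Records[].kinesis.data`. Everything else is an assumption: the segment fields `call_id` and `text` are hypothetical, and the keyword check is only a stand-in for the custom AI models described above.

```python
import base64
import json

# Hypothetical trigger phrases. The case study used custom AI models,
# not keyword matching; this is a placeholder for illustration.
VULNERABILITY_TERMS = ("bereavement", "lost my job", "struggling to pay")


def decode_kinesis_records(event: dict) -> list[dict]:
    """Decode the base64-encoded JSON payloads that Kinesis delivers to Lambda."""
    segments = []
    for record in event.get("Records", []):
        payload = base64.b64decode(record["kinesis"]["data"])
        segments.append(json.loads(payload))
    return segments


def flag_vulnerability(text: str) -> bool:
    """Return True if any placeholder trigger phrase appears in the text."""
    lowered = text.lower()
    return any(term in lowered for term in VULNERABILITY_TERMS)


def handler(event: dict, context: object = None) -> list[dict]:
    """Lambda-style entry point: returns one nudge per transcript segment."""
    nudges = []
    for segment in decode_kinesis_records(event):
        nudges.append({
            "call_id": segment["call_id"],  # assumed field name
            "text": segment["text"],        # assumed field name
            "vulnerable": flag_vulnerability(segment["text"]),
        })
    return nudges


def make_kinesis_event(segments: list[dict]) -> dict:
    """Build a local test event in the shape Lambda receives from Kinesis."""
    return {
        "Records": [
            {"kinesis": {"data": base64.b64encode(json.dumps(s).encode("utf-8")).decode("ascii")}}
            for s in segments
        ]
    }


sample = make_kinesis_event([
    {"call_id": "c-1", "text": "I lost my job last month"},
    {"call_id": "c-1", "text": "What are your opening hours?"},
])
for nudge in handler(sample):
    print(nudge)
```

In a real deployment the flag would come from a model call rather than a phrase list, and the nudges would be pushed to the agent desktop instead of returned.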

The Solution Architecture

The solution architecture employs a robust suite of AWS services to deliver a scalable and efficient Smart Agent Assist platform. Amazon DynamoDB serves as the core data store, providing fast and reliable access to structured data. Amazon SageMaker hosts and manages the LLMs, ensuring scalable AI deployment. AWS Lambda provides serverless compute for real-time event processing and integration tasks, while Amazon Kinesis ingests high-throughput data streams from Genesys. Amazon Cognito provides secure token-based authentication, supporting user identity and access control. Finally, Amazon ECS on AWS Fargate runs the AI services as containerized applications, ensuring high availability with minimal infrastructure management. Collectively, these services form a secure, scalable, and high-performing solution.
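One way the SageMaker and DynamoDB pieces could fit together is sketched below: a transcript is sent to a hosted model endpoint and the resulting summary is written to a table. The `invoke_endpoint` and `put_item` calls mirror the boto3 SageMaker Runtime client and DynamoDB `Table` resource. The endpoint name, the request and response JSON shape (`inputs`, `summary`), and the item attributes are assumptions, and the `Fake*` classes are in-memory stand-ins so the sketch runs without AWS credentials.

```python
import json
import time


def summarize_call(runtime_client, endpoint_name: str, transcript: str) -> str:
    """Send a transcript to a SageMaker endpoint and return the generated summary.

    The JSON shape ("inputs" in, "summary" out) is an assumption; it depends
    on the serving container behind the endpoint.
    """
    response = runtime_client.invoke_endpoint(
        EndpointName=endpoint_name,
        ContentType="application/json",
        Body=json.dumps({"inputs": transcript}),
    )
    return json.loads(response["Body"].read())["summary"]


def store_summary(table, call_id: str, summary: str) -> dict:
    """Persist the summary; attribute names are hypothetical."""
    item = {"call_id": call_id, "summary": summary, "created_at": int(time.time())}
    table.put_item(Item=item)
    return item


# In-memory stand-ins for the AWS clients (illustration only).
class FakeBody:
    def __init__(self, payload: bytes) -> None:
        self._payload = payload

    def read(self) -> bytes:
        return self._payload


class FakeRuntime:
    def invoke_endpoint(self, EndpointName, ContentType, Body):
        # Pretend the model "summarizes" by truncating the transcript.
        text = json.loads(Body)["inputs"]
        return {"Body": FakeBody(json.dumps({"summary": text[:40]}).encode("utf-8"))}


class FakeTable:
    def __init__(self) -> None:
        self.items = []

    def put_item(self, Item):
        self.items.append(Item)


table = FakeTable()
summary = summarize_call(
    FakeRuntime(),
    "hypothetical-summary-endpoint",
    "Customer called about a delayed parcel and asked for a refund.",
)
store_summary(table, "c-1", summary)
print(table.items[0]["summary"])
```

With boto3 installed and credentials configured, the stand-ins would be replaced by `boto3.client("sagemaker-runtime")` and `boto3.resource("dynamodb").Table(...)` with a real table name.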

Smart Agent Assist: Technical Architecture

The Business Result

The LLM implementation achieved significant operational efficiencies:

- Reduced summary generation time to 1-2 seconds.

- Contributed to a 12-15% reduction in average handling time, enhanced overall resource utilization, and minimized after-call work.

- Reached 85% accuracy in vulnerability identification, supporting proactive customer support and risk management.

- Delivered a 20% decrease in the cost to serve, underscoring the solution’s cost-effectiveness.

- Achieved a 10% increase in CSAT scores, reflecting enhanced customer satisfaction and loyalty.

- Streamlined live agent assistance, minimizing customer effort during interactions.

- Achieved over 85% accuracy in entity extraction.